Whether it’s diagnosing patients or driving cars, we want to know whether we can trust a person before assigning them a sensitive task. In the human world, we have different ways to establish and measure trustworthiness. In artificial intelligence, the establishment of trust is still developing.

In recent years, deep learning has proven to be remarkably good at difficult tasks in computer vision, natural language processing, and other fields that were previously off-limits for computers. But we also have ample proof that placing blind trust in AI algorithms is a recipe for disaster: self-driving cars that miss lane dividers, melanoma detectors that look for ruler marks instead of malignant skin patterns, and hiring algorithms that discriminate against women are just a few of the many incidents that have been reported in the past years.

Recent work by scientists at the University of Waterloo and Darwin AI, a Toronto-based AI company, provides new metrics to measure the trustworthiness of deep learning systems in an intuitive and interpretable way. Trust is often a subjective issue, but their research, presented in two papers, provides clear guidelines on what to look for when evaluating the scope of situations in which AI models can and can’t be trusted.


How far do you trust machine learning?

For many years, machine learning researchers measured the trustworthiness of their models through metrics such as accuracy, precision, and F1 score. These metrics compare the number of correct and incorrect predictions made by a machine learning model in various ways. They can answer important questions, such as whether a model is making random guesses or has actually learned something. But counting the number of correct predictions doesn’t necessarily tell you whether a machine learning model is doing its job correctly.

More recently, the field has shown a growing interest in explainability, a set of techniques that try to interpret decisions made by deep neural networks. Some techniques highlight the pixels that have contributed to a deep learning model’s output. For instance, if your convolutional neural network has classified an image as “sheep,” explainability techniques can help you figure out whether the neural network has learned to detect sheep or if it is classifying patches of grass as sheep.

Explainability techniques can help you make sense of how a deep learning model works, but not when and where it can and can’t be trusted.

In their first paper, titled “How Much Can We Really Trust You? Towards Simple, Interpretable Trust Quantification Metrics for Deep Neural Networks,” the AI researchers at Darwin AI and the University of Waterloo introduce four new metrics for “assessing the overall trustworthiness of deep neural networks based on their behavior when answering a set of questions.”

While there are other papers and research work on measuring trust, these four metrics have been designed to be practical for everyday use. On the one hand, the developers and users of AI systems should be able to continuously compute and use these metrics to constantly monitor areas in which their deep learning models can’t be trusted. On the other hand, the metrics should be simple and interpretable.

In the second paper, titled “Where Does Trust Break Down? A Quantitative Trust Analysis of Deep Neural Networks via Trust Matrix and Conditional Trust Densities,” the researchers introduce the “trust matrix,” a visual representation of the trust metrics across different tasks.

Overconfident or overcautious?

Consider two types of people, one who is overly confident in their wrong decisions and another who is too hesitant about the right decision. Both would be untrustworthy partners. We all like to work with people who have a balanced behavior: they should be confident about their right answers and also know when a task is beyond their abilities.

In this regard, machine learning systems are not very different from humans. If a neural network classifies a stop sign as a speed limit sign with a 99 percent confidence score, then you probably shouldn’t install it in your self-driving car. Likewise, if another neural network is only 30 percent confident it is standing on a road, then it wouldn’t help much in driving your car.

“Question-answer trust,” the first metric introduced by the researchers, measures an AI model’s confidence in its right and wrong answers. Like classical metrics, it takes into account the number of right and wrong predictions a machine learning model makes, but also factors in their confidence scores to penalize overconfidence and overcautiousness.

Say your machine learning model must classify nine photos and determine which ones contain cats. The question-answer trust metric rewards every right classification in proportion to its confidence score, so higher confidence in a right answer earns a higher reward. But the metric also rewards wrong answers by the complement of the confidence score (i.e., 100% minus the confidence score). So a low confidence score in a wrong classification can earn as much reward as high confidence in the right classification.
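To make the scoring rule concrete, here is a minimal Python sketch based only on the description above, not on the exact formulation in the paper. The function name `question_answer_trust` and the sample confidence values are assumptions made for illustration.

```python
def question_answer_trust(confidence: float, is_correct: bool) -> float:
    """Reward confidence when the answer is right, and doubt when it is wrong.

    Sketch of the rule as described in the article; the paper's exact
    formulation may differ.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # Right answer: reward equals the confidence score.
    # Wrong answer: reward equals 1 minus the confidence score.
    return confidence if is_correct else 1.0 - confidence


# Hypothetical (confidence, is_correct) pairs for three of the cat photos.
examples = [(0.95, True), (0.95, False), (0.10, False)]
scores = [question_answer_trust(conf, ok) for conf, ok in examples]
```

In this sketch, a confident right answer and a hesitant wrong answer both score high, while a confident wrong answer scores close to zero.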

What’s interesting about this metric is that, unlike precision and accuracy scores, it’s not about how many right predictions your machine learning model makes (after all, nobody is perfect). It’s rather about how trustworthy the model’s predictions are.

Setting up a hierarchy of trust scores

Question-answer trust enables us to measure the trust level of single outputs made by our deep learning models. In their paper, the researchers expand on this notion and provide three more metrics that enable us to evaluate the overall trust level of a machine learning model.

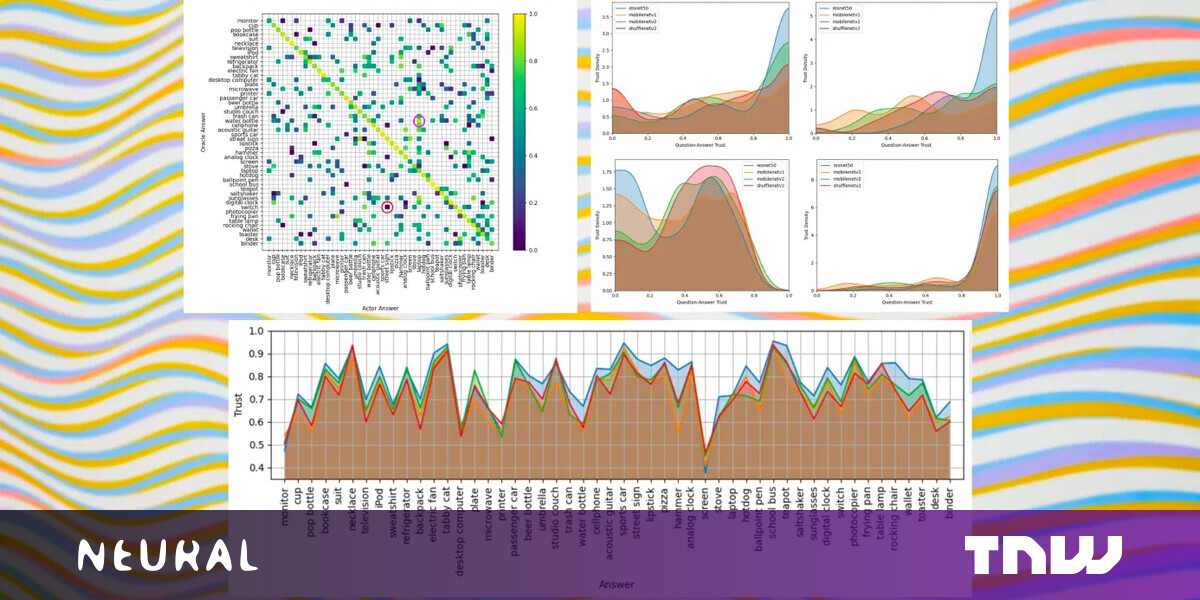

The first, “trust density,” measures the trust level of a model on a specific output class. Say you have a neural network trained to detect 20 different types of pictures, but you want to measure its overall trust level in the class “cat.” Trust density visualizes the distribution of the machine learning model’s question-answer trust for “cat” across multiple examples. A strong model should show higher density toward the right (question-answer trust = 1.0) and lower density toward the left (question-answer trust = 0.0).
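As a rough illustration, the trust density for one class can be approximated with a simple histogram of question-answer trust values. The sketch below assumes examples are grouped by their ground-truth label and uses five equal-width bins; the `Prediction` record, the `trust_density` function, and the sample data are all hypothetical.

```python
from collections import namedtuple

# Hypothetical record type: one model output on one test example.
Prediction = namedtuple("Prediction", ["true_label", "predicted_label", "confidence"])


def question_answer_trust(p):
    """Confidence if the prediction is right, 1 - confidence if it is wrong."""
    return p.confidence if p.predicted_label == p.true_label else 1.0 - p.confidence


def trust_density(predictions, label, bins=5):
    """Fraction of examples of `label` falling in each trust bin over [0, 1]."""
    scores = [question_answer_trust(p) for p in predictions if p.true_label == label]
    if not scores:
        return [0.0] * bins
    counts = [0] * bins
    for score in scores:
        # A score of exactly 1.0 is placed in the last bin.
        index = min(int(score * bins), bins - 1)
        counts[index] += 1
    return [count / len(scores) for count in counts]


predictions = [
    Prediction("cat", "cat", 0.95),
    Prediction("cat", "cat", 0.85),
    Prediction("cat", "dog", 0.30),
    Prediction("cat", "dog", 0.90),
    Prediction("dog", "dog", 0.80),
]
density = trust_density(predictions, "cat")
```

With this sample data, most of the mass for “cat” sits in the rightmost bins, with one overconfident mistake landing in the leftmost bin.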

The second metric, “trust spectrum,” further zooms out and measures the model’s trustworthiness across different classes when tested on a finite set of inputs. When visualized, the trust spectrum provides a nice overview of where you can and can’t trust a machine learning model. For instance, the following trust spectrum shows that our neural network, in this case ResNet-50, can be trusted in detecting teapots and school buses, but not in screens and monitors.

Finally, the “NetTrustScore” summarizes the information of the trust spectrum into a single metric. “From an interpretation perspective, the proposed NetTrustScore is fundamentally a quantitative score that indicates how well placed the deep neural network’s confidence is expected to be under all possible answer scenarios that can occur,” the researchers write.
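The two aggregate metrics can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the paper’s exact definition: the trust spectrum is taken to be the mean question-answer trust per ground-truth class, and the overall score is taken to be those per-class means weighted by how often each class appears. The function names and sample records are hypothetical.

```python
from collections import defaultdict


def question_answer_trust(true_label, predicted_label, confidence):
    """Confidence if the prediction is right, 1 - confidence if it is wrong."""
    return confidence if predicted_label == true_label else 1.0 - confidence


def trust_spectrum(records):
    """Assumed definition: mean question-answer trust per ground-truth class."""
    by_class = defaultdict(list)
    for true_label, predicted_label, confidence in records:
        by_class[true_label].append(
            question_answer_trust(true_label, predicted_label, confidence)
        )
    return {label: sum(scores) / len(scores) for label, scores in by_class.items()}


def net_trust_score(records):
    """Assumed summary: per-class means weighted by class frequency."""
    spectrum = trust_spectrum(records)
    counts = defaultdict(int)
    for true_label, _, _ in records:
        counts[true_label] += 1
    total = len(records)
    return sum(spectrum[label] * counts[label] / total for label in spectrum)


# Hypothetical (true_label, predicted_label, confidence) records.
records = [
    ("teapot", "teapot", 0.9),
    ("teapot", "teapot", 0.8),
    ("monitor", "screen", 0.9),
    ("monitor", "monitor", 0.5),
]
spectrum = trust_spectrum(records)
overall = net_trust_score(records)
```

In this toy data, “teapot” ends up with a much higher per-class score than “monitor,” and the single overall score sits between the two.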

The machine learning trust matrix

In their complementary paper, the AI researchers introduce the trust matrix, a visual aid that gives a quick glimpse of the overall trust level of a machine learning model. Basically, the trust matrix is a grid that maps the outputs of a machine learning model to their actual values and the trust level. The vertical axis represents the “oracle,” the known values of the inputs provided to the machine learning model. The horizontal axis is the prediction made by the model. Each square represents a test, with its position on the X-axis showing the output of the model and its position on the Y-axis showing the actual value. The color of each square shows its trust level, with bright colors representing high and dark colors representing low trust.

A perfect model should have bright-colored squares across the diagonal going from the top-left to the bottom-right, where predictions and ground truth cross paths. A trustworthy model can have squares that are off the diagonal, but those squares should be colored brightly as well. A bad model will quickly show itself with dark-colored squares.

For instance, the red circle represents a “switch” that was predicted as a “street sign” by the machine learning model with a low trust score. This means that the model was very confident it was seeing a street sign while in reality, it was looking at a switch. On the other hand, the pink circle represents a high trust level on a “water bottle” that was classified as a “laptop.” This means the machine learning model had provided a low confidence score, signaling that it was doubtful of its own classification.
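A trust matrix of this kind could be built along the following lines. The sketch assumes each cell holds the mean question-answer trust of all test examples with a given (oracle, prediction) pair, and leaves cells with no examples empty; the `trust_matrix` function and the confidence values are hypothetical, chosen to mirror the two cases described above.

```python
from collections import defaultdict


def trust_matrix(records, labels):
    """Grid of mean question-answer trust.

    Rows follow the oracle (ground truth), columns follow the prediction.
    Cells with no examples are None. Averaging per cell is an assumption.
    """
    cells = defaultdict(list)
    for true_label, predicted_label, confidence in records:
        correct = predicted_label == true_label
        trust = confidence if correct else 1.0 - confidence
        cells[(true_label, predicted_label)].append(trust)

    def cell_value(true_label, predicted_label):
        key = (true_label, predicted_label)
        if key not in cells:
            return None
        return sum(cells[key]) / len(cells[key])

    return [[cell_value(t, p) for p in labels] for t in labels]


labels = ["switch", "street sign", "water bottle", "laptop"]
# Hypothetical (true_label, predicted_label, confidence) records.
records = [
    ("switch", "street sign", 0.95),   # confident mistake: low trust
    ("water bottle", "laptop", 0.20),  # hesitant mistake: high trust
    ("laptop", "laptop", 0.90),        # confident right answer: high trust
]
matrix = trust_matrix(records, labels)
```

Here `matrix[0][1]` corresponds to the dark off-diagonal square for the switch mistaken for a street sign, while `matrix[2][3]` is a bright off-diagonal square for the doubtful water bottle classification.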

Putting trust metrics to use

The hierarchical structure of the trust metrics proposed in the papers makes them very useful. For instance, when choosing a machine learning model for a task, you can shortlist your candidates by reviewing their NetTrustScores and trust matrices. You can further investigate the candidates by comparing their trust spectrums on multiple classes and further compare their performance on single classes on the trust density score.

The trust metrics will help you quickly find the best model for your task or find important areas where you can make improvements to your model.

Like many areas of machine learning, this is a work in progress. In their current form, the machine learning trust metrics only apply to a limited set of supervised learning problems, namely classification tasks. In the future, the researchers will be expanding on the work to create metrics for other kinds of tasks such as object detection, speech recognition, and time series. They will also be exploring trust in unsupervised machine learning algorithms.

“The proposed metrics are by no means perfect, but the hope is to push the conversation towards better quantitative metrics for evaluating the overall trustworthiness of deep neural networks to help guide practitioners and regulators in producing, deploying, and certifying deep learning solutions that can be trusted to operate in real-world, mission-critical scenarios,” the researchers write.

This article was originally published by Ben Dickson on TechTalks, a publication that examines trends in technology, how they affect the way we live and do business, and the problems they solve. But we also discuss the evil side of technology, the darker implications of new tech and what we need to look out for. You can read the original article here.

Published December 2, 2020 — 11:00 UTC